Making it easier than ever to move data between your data warehouse and Neo4j!

https://neo4j.com/developer-blog/introducing-neo4j-data-warehouse-connector/

Authors: @luke_gannon and @zach_blumenfeld

For any analytics solution to be useful, it must integrate into your data ecosystem, ideally in an easy and intuitive way. At Neo4j, ecosystem integration is a core product principle and we always strive to meet data engineers and data scientists where they are.

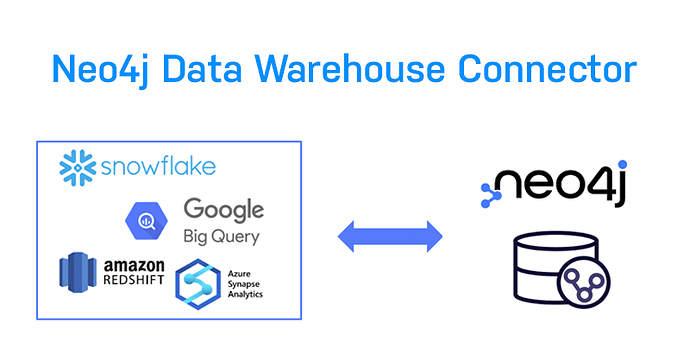

In this post, we cover the new Neo4j Data Warehouse (DWH) Connector, which we recently released to simplify interoperability between Neo4j, Spark, and popular data warehouse technologies.

For quite a while now, Neo4j has maintained an Apache Spark Connector that enables you to build Spark-based ETLs and other workflows with Neo4j. The Spark connector remains a good general-purpose solution for batch transaction processing with Neo4j, particularly for data engineers and data scientists already familiar with Spark. However, we noticed that many of these graph ETL workflows share a common pattern: moving data between data warehouses and Neo4j. To make workflows with this pattern faster and easier to build, we added the DWH Connector to our portfolio. It runs on top of Spark and provides a simple, high-level API specifically for moving data between Neo4j and popular data warehouses.

The DWH connector works with Neo4j AuraDB and AuraDS, Neo4j Enterprise Edition (self-managed), and even Neo4j Community without limitations. This means all Neo4j Graph Data Science users will be able to leverage the DWH connector as well, since all database deployments are included.

Supported Data Warehouses & Technologies

Currently, the DWH connector supports both Spark 3.x and Spark 2.4 and allows you to interact with the following popular data warehouse technologies:

- Snowflake

- Google BigQuery

- Amazon Redshift

- Azure Synapse

You can use the DWH connector wherever you run Spark, whether that is Databricks, AWS EMR, Azure Synapse Analytics, Google Dataproc, or your own local environment.

How It Works

The DWH connector can move data in either direction: from a data warehouse to Neo4j, or from Neo4j to a data warehouse. It can be used in two ways:

1. Using PySpark or Scala Spark

You can provide high-level configurations and run the DWH connector in either PySpark or Scala Spark. This can be easily leveraged in notebooks or other scripts and programs. Below is an example in PySpark for moving data from Snowflake to Neo4j.
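As a minimal sketch of the data movement such a job performs, the snippet below reads a Snowflake table and writes it to Neo4j using the two underlying Spark data sources directly. It deliberately does not use the DWH connector's own high-level classes, whose exact signatures are best taken from the repository documentation. The function names, the table, label, and key column, and all bracketed credentials are hypothetical placeholders; the option keys are those of the Snowflake and Neo4j Spark connectors.

```python
def snowflake_read_options(table: str) -> dict:
    """Options for the Snowflake Spark data source (placeholder credentials)."""
    return {
        "sfURL": "<account>.snowflakecomputing.com",
        "sfUser": "<snowflake-user>",
        "sfPassword": "<snowflake-password>",
        "sfDatabase": "<database>",
        "sfSchema": "<schema>",
        "sfWarehouse": "<warehouse>",
        "dbtable": table,
    }


def neo4j_write_options(label: str, key_column: str) -> dict:
    """Options for the Neo4j Spark data source (placeholder credentials)."""
    return {
        "url": "neo4j+s://<host>:7687",
        "authentication.basic.username": "<neo4j-user>",
        "authentication.basic.password": "<neo4j-password>",
        "labels": f":{label}",
        # Overwrite mode merges nodes, so it needs a key to match on.
        "node.keys": key_column,
    }


def copy_table_to_nodes(spark, table: str, label: str, key_column: str) -> None:
    """Read one Snowflake table and merge its rows into Neo4j as nodes.

    `spark` is an existing SparkSession that already has the Snowflake and
    Neo4j Spark connector jars on its classpath.
    """
    df = (
        spark.read.format("net.snowflake.spark.snowflake")
        .options(**snowflake_read_options(table))
        .load()
    )
    (
        df.write.format("org.neo4j.spark.DataSource")
        .mode("Overwrite")
        .options(**neo4j_write_options(label, key_column))
        .save()
    )
```

In a notebook, a call such as `copy_table_to_nodes(spark, "CUSTOMER", "Customer", "C_CUSTKEY")` would run the copy. The DWH connector wraps this read-then-write pattern behind a single source and target configuration.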

2. Using Spark Submit

The Neo4j DWH Connector can be used with spark-submit out of the box. You just need to provide a JSON file with the source and target database configurations.

Below is an example of using spark-submit on the command line to move data from Snowflake to Neo4j. Notice that you use the provided Neo4jDWHConnector class.
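One way to picture such an invocation is to assemble the argument list in Python, as in the hedged sketch below. The `--class`, `--packages`, and `--master` options are standard spark-submit options. The fully qualified class name and the `-p` flag for the configuration path are assumptions, as are the helper name and every bracketed placeholder; confirm the real values with the jar's `-h` output.

```python
import shlex


def spark_submit_command(jar_path, config_path, packages, master="local"):
    """Build a spark-submit argument list for a DWH connector job."""
    return [
        "spark-submit",
        # Assumed package name for the Neo4jDWHConnector class.
        "--class", "org.neo4j.dwh.connector.Neo4jDWHConnector",
        # Connector dependencies, resolved by Spark as Maven coordinates.
        "--packages", ",".join(packages),
        "--master", master,
        jar_path,
        # Assumed flag for passing the job configuration file.
        "-p", config_path,
    ]


cmd = spark_submit_command(
    jar_path="<path-to>/neo4j-dwh-connector-<version>.jar",
    config_path="dwh_job_config.json",
    packages=[
        "<neo4j-spark-connector-coordinates>",
        "<snowflake-spark-connector-coordinates>",
    ],
)

# Print a shell-quoted version that can be reviewed before running it.
print(shlex.join(cmd))
```

Building the command as a list keeps each argument separate, which makes it straightforward to hand to `subprocess.run` from a scheduler or script once the placeholders are filled in.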

The dwh_job_config.json file contains similar configurations to what we saw in PySpark above. Below is an example:
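To give a feel for the shape of such a file, the sketch below builds a job description in Python and serializes it to JSON. The top-level layout (`name`, `source`, `target`, each with `format`, `options`, and a write `mode`) is an assumption based on the source and target structure described in this post, not the authoritative schema; the generated configuration stub is the reference. All bracketed values are placeholders.

```python
import json

# Assumed layout: one named job with a source and a target section.
job_config = {
    "name": "Snowflake customers to Neo4j",
    "source": {
        "format": "snowflake",
        "options": {
            "sfURL": "<account>.snowflakecomputing.com",
            "sfUser": "<snowflake-user>",
            "sfPassword": "<snowflake-password>",
            "sfDatabase": "<database>",
            "sfSchema": "<schema>",
            "sfWarehouse": "<warehouse>",
            "dbtable": "CUSTOMER",
        },
    },
    "target": {
        "format": "org.neo4j.spark.DataSource",
        "options": {
            "url": "neo4j+s://<host>:7687",
            "authentication.basic.username": "<neo4j-user>",
            "authentication.basic.password": "<neo4j-password>",
            "labels": ":Customer",
            "node.keys": "C_CUSTKEY",
        },
        "mode": "Overwrite",
    },
}

config_text = json.dumps(job_config, indent=2)


def write_job_config(path: str, text: str) -> None:
    """Write the serialized job configuration to disk."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(text)
```

Calling `write_job_config("dwh_job_config.json", config_text)` produces a file that can then be edited by hand or checked into version control next to the job that uses it.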

It is also possible to use the DWH connector jar to generate a template JSON configuration, also known as a "configuration stub". To review the full list of options, run the following command:

java -jar neo4j-dwh-connector-<version>.jar -h

For More Information

The best place to find out more about the DWH connector is the GitHub repository documentation. You can also drop by the Neo4j Community site to ask questions, or get in touch directly with your Neo4j representative.

Get Started Today!

To get started, you will need both the DWH Connector and Neo4j.

There are multiple ways to get the DWH Connector:

- Download it from the Neo4j Download Center.

- Include it with Maven. The Maven coordinates depend on the Spark version:

  - For Spark 2.4 with Scala 2.11 and 2.12: org.neo4j:neo4j-dwh-connector_<scala_version>:<dwh-connector-version>_for_spark_2.4

  - For Spark 3.x with Scala 2.12 and 2.13: org.neo4j:neo4j-dwh-connector_<scala_version>:<dwh-connector-version>_for_spark_3

  At the time of this post, the current dwh-connector-version is 1.0.0.

- Build it locally from source, as described in the GitHub repository documentation.
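The Maven coordinates above follow a pattern of Scala version, connector version, and Spark line, so they can be assembled with a small helper. The sketch below assumes the pattern `org.neo4j:neo4j-dwh-connector_<scala_version>:<dwh-connector-version>_for_spark_<spark_line>` and the Scala and Spark combinations listed in this post; the function name is hypothetical.

```python
# Scala versions listed in this post for each supported Spark line.
SUPPORTED_SCALA = {
    "2.4": {"2.11", "2.12"},
    "3": {"2.12", "2.13"},
}


def dwh_connector_coordinates(scala_version: str,
                              connector_version: str,
                              spark_line: str) -> str:
    """Return the Maven coordinates for one Scala and Spark combination."""
    if spark_line not in SUPPORTED_SCALA:
        raise ValueError(f"unsupported Spark line: {spark_line}")
    if scala_version not in SUPPORTED_SCALA[spark_line]:
        raise ValueError(
            f"Scala {scala_version} is not listed for Spark {spark_line}"
        )
    return (
        f"org.neo4j:neo4j-dwh-connector_{scala_version}:"
        f"{connector_version}_for_spark_{spark_line}"
    )


# Coordinates for Spark 3.x with Scala 2.12 and connector version 1.0.0.
print(dwh_connector_coordinates("2.12", "1.0.0", "3"))
```

The resulting string is in the form accepted by `spark-submit --packages` and by the `spark.jars.packages` configuration setting.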

If you do not already have Neo4j, there are a couple of different deployment options.

For data science use cases, you can familiarize yourself with the DWH connector right away, and for free, using a Neo4j Sandbox. To scale up a bit more, you can get larger instances up and running in minutes using our fully managed graph data science service, AuraDS. Once you’re ready to go to production, please get in touch with us to help you find the right deployment option with the right SLAs and support for your use case.

For application development, if you aren’t already an AuraDB Free user, you can sign up for AuraDB Free to get started right away.