I am working with a simple tab-delimited csv file that contains around 4 million rows. Creating all nodes and relationships takes around 1h, which seems to be too long and I am wondering where I can improve the speed. All Person nodes in the graph are unique (person is a 10-digit number). Each person can have ID number, phone number, email and zip code associated with address:

CREATE CONSTRAINT ON (person:Person) ASSERT person.idNumber IS UNIQUE;

CREATE CONSTRAINT ON (phone:Phone) ASSERT phone.phoneNumber IS UNIQUE;

CREATE CONSTRAINT ON (email:Email) ASSERT email.emailAddress IS UNIQUE;

CREATE INDEX ON :Person(dob);

CREATE INDEX ON :Person(time_stamp);

USING PERIODIC COMMIT 5000

LOAD CSV WITH HEADERS FROM 'file:///myfile.csv' AS row

FIELDTERMINATOR '\t'

MERGE (personl:Person {idNumber: row.idnumber, time_stamp: row.timestamp})

ON CREATE SET person.dob=row.birthday

ON MATCH SET person.dob=row.birthday

WITH row

WHERE row.phone IS NOT NULL

MERGE (phone:Phone { phoneNumber:row.phonenumber })

WITH row

WHERE row.email IS NOT NULL

MERGE (email:Email { emailAddress:row.email})

USING PERIODIC COMMIT 1000

LOAD CSV WITH HEADERS FROM 'file:///myfile.csv' AS row

FIELDTERMINATOR '\t'

MATCH (person:Person {idNumber: row.idnumber, time_stamp: row.time_stamp,dob:row.birthday})

MATCH (phone:Phone { phoneNumber:row.phonenumber})

MERGE (person)-[:HAS_PHONE]->(phone)

Am I doing something wrong? Can I parallelize node imports using APOC? All Person nodes are unique, but there are several rows the same phones/emails as well as rows with missing data.

Please format code + Cypher statements with the code </> icon, it's much easier to read.

Hey,

If you look at your first merge, you are including time_stamp in the key value:pair. It will scan the Person nodes looking for that combination, which is not indexed.

You can use EXPLAIN to see if you are doing node scans or index lookups.

There should only be ONE of each Person, Email or Phone in your graph, so not sure what is the purpose of Timestamp. It is the time of the last update? Then put it in the SET.

If you need to use idnumber and timestamp for Person create an index on both, not just :Person(time_stamp)

You don't need the ON CREATE SET and ON MATCH SET if both are the same, just do

The key value pair in the MATCH or MERGE should only be what is unique. If idnumber is unique, only use that, if idnumber and time_stamp is unique, then use both, but don't include attributes that aren't indexed.

I think the second loadcsv should not have the birthday, again, that combination isn't indexed so it will do a node scan.

And now I have that song from America stuck in my head, I went to the desert on a horse with no name...

Thank you for the reply! I changed the statements, but it still takes around 7-8min to create around 5 million relationships (around 5 million Person nodes to ~4 million Phone nodes). Is that expected? I can significantly improve node loading time when using APOC parallel loading, but that requires some post-processing since there are phones and other properties that repeat causing a NPE.

I am using time stamp for queries in order to filter records that occurred from to

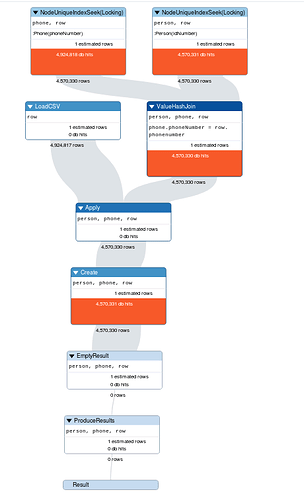

Here is the PROFILE output:

Looking at the logs I noticed Detected VM stop -the-world pause, so this might have something to do with tuning the neo4j configuration?

Can you expand that query plan? In the lower right hand corner of the result pane you can find a double-down-arrow button that will expand all elements of the plan.

I can see that ValueHashJoin is an eager operation, but I am not sure how to avoid it.