Hey everyone,

I am currently using Neo4j Community Edition 3.5.12.

It's running on a CentOS 7.7 server with 29 GB of RAM.

My graph currently has around 46k user nodes, 7k movie nodes and 21 genre nodes, with around 2.1 million relationships.

I have changed the following parameters in the `neo4j.conf` file:

```
dbms.memory.heap.initial_size=8024m
dbms.memory.heap.max_size=8024m
```

I have also created indexes on `movie_id`, `user_id` and `genre_name`.

Apart from the obvious relationships between users and movies and between movies and genres, I have also related each user to two genre nodes based on their top genres.

Including these, the overall relationship count is around 2.1 million.
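To make the `*2` pattern and the `repeated = 2` check concrete, here is a small Python model of the genre links. The user IDs and genre names are made-up sample data, and the function name is hypothetical. Since every `top_2_genre_is` relationship connects a user to a genre, a two-hop path is always user-genre-user, so the number of paths between two users equals the number of top genres they share (and each pair is found once in each direction):

```python
from collections import Counter
from itertools import permutations

# Hypothetical sample data: each user is linked to exactly two top genres,
# mirroring the :top_2_genre_is relationships described above.
top_genres = {
    1: {"Drama", "Comedy"},
    2: {"Drama", "Comedy"},
    3: {"Drama", "Thriller"},
    4: {"Horror", "Sci-Fi"},
}


def shared_genre_path_counts(top_genres):
    """Count user-genre-user paths for every ordered pair u1 != u2.

    Each shared genre contributes one two-hop path, so the count equals the
    number of top genres the two users have in common.
    """
    # Invert the mapping: genre -> users who have it as a top genre.
    members = {}
    for user, genres in top_genres.items():
        for genre in genres:
            members.setdefault(genre, []).append(user)
    counts = Counter()
    for users in members.values():
        # Every ordered pair of distinct users under one genre is one path.
        for u1, u2 in permutations(users, 2):
            counts[(u1, u2)] += 1
    return counts


counts = shared_genre_path_counts(top_genres)
# Pairs with exactly two paths share both top genres (the `repeated = 2` filter).
both_shared = sorted(pair for pair, n in counts.items() if n == 2)
print(both_shared)  # [(1, 2), (2, 1)]
```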

With this setup, I want to get the number of similar customers (or the actual similar customer nodes) for each customer, using the query below.

It takes forever to run, even with a `LIMIT` at the end.

I am currently running it from the Neo4j Browser, but I have also tried it in cypher-shell.

```cypher
match (u1:User)-[r1:top_2_genre_is*2]-(u2:User)
where u1.movies_count_85 >= 20
  and u2.movies_count_85 >= u1.movies_count_85 * 0.5
  and u1 <> u2
with u1.user_id as tc, u1.movies_count_85 as tc_count, u1.movies_rated_85 as movie_set,
     u2.user_id as sc, u2.movies_count_85 as sc_count,
     algo.similarity.overlap(u1.movies_rated_85, u2.movies_rated_85) as similarity,
     apoc.coll.intersection(u1.movies_rated_85, u2.movies_rated_85) as matching_movies,
     count(1) as repeated
where similarity >= 0.75 and repeated = 2
return tc, count(sc)
order by count(sc) desc
limit 100
```
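As I understand it, `algo.similarity.overlap` computes the overlap coefficient, |A ∩ B| / min(|A|, |B|). Below is a minimal Python sketch of the per-pair `where` conditions in the query. It assumes `movies_count_85` equals the number of distinct movies in `movies_rated_85`, and the function names and sample lists are hypothetical; the shared-genre check is left out:

```python
def overlap_similarity(a, b):
    """Overlap coefficient: |A & B| / min(|A|, |B|).

    Returns 0.0 when either list is empty (a choice made for this sketch).
    """
    sa, sb = set(a), set(b)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / min(len(sa), len(sb))


def passes_filters(u1_movies, u2_movies, min_count=20, ratio=0.5, threshold=0.75):
    """Mirror the WHERE conditions of the query for one (u1, u2) pair.

    Assumes movies_count_85 == number of distinct movies in movies_rated_85.
    """
    c1, c2 = len(set(u1_movies)), len(set(u2_movies))
    if c1 < min_count:           # u1.movies_count_85 >= 20
        return False
    if c2 < c1 * ratio:          # u2.movies_count_85 >= u1.movies_count_85 * 0.5
        return False
    return overlap_similarity(u1_movies, u2_movies) >= threshold  # similarity >= 0.75


u1 = list(range(1, 21))    # 20 movies
u2 = list(range(6, 31))    # 25 movies, 15 of them shared with u1
u3 = list(range(11, 41))   # 30 movies, 10 of them shared with u1
print(overlap_similarity(u1, u2), passes_filters(u1, u2))  # 0.75 True
print(overlap_similarity(u1, u3), passes_filters(u1, u3))  # 0.5 False
```

Because the denominator is the smaller of the two sets, a user whose movies are all contained in the other user's list scores 1.0; the count-ratio condition keeps `u2` from being much smaller than `u1`.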

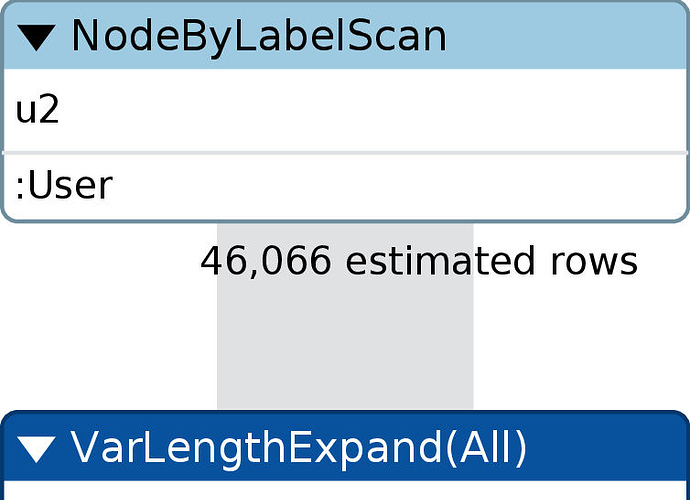

I am attaching the explain plan for the same :

To sanity-check the query, and to see how many DB hits it makes, I modified it to run for a single user (`u1` in the query above):

```cypher
profile match (u1:User {user_id: 1})-[r1:top_2_genre_is*2]-(u2:User)
where u2.movies_count_85 >= u1.movies_count_85 * 0.5
  and u1 <> u2
with u1.user_id as tc, u1.movies_count_85 as tc_count, u1.movies_rated_85 as movie_set,
     u2.user_id as sc, u2.movies_count_85 as sc_count,
     algo.similarity.overlap(u1.movies_rated_85, u2.movies_rated_85) as similarity,
     apoc.coll.intersection(u1.movies_rated_85, u2.movies_rated_85) as matching_movies,
     count(1) as repeated
where similarity >= 0.75 and repeated = 2
return tc, count(sc)
order by count(sc) desc
```

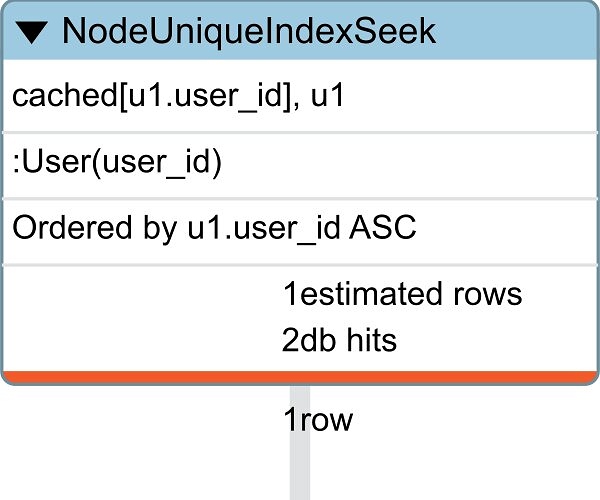

I am attaching the profile plan for this one :

It's clear that the DB hits are quite high even for a single user.

But I need to run this for all users.
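For a rough sense of scale, here is a back-of-envelope Python estimate of how many user-genre-user paths the all-users query expands, ignoring the `movies_count_85` filters. It assumes every one of the ~46k users has exactly two top-genre links spread over the 21 genres; since uneven genre sizes only increase the total, an even split gives a lower bound. The function name is hypothetical:

```python
def min_two_hop_paths(num_users, num_genres, genres_per_user=2):
    """Lower bound on ordered user-genre-user paths (u1 != u2).

    Assumes every user has exactly `genres_per_user` top-genre links. A genre
    with d members yields d * (d - 1) ordered paths; for a fixed total number
    of links, that sum is smallest when members are spread as evenly as
    possible, so the even split is a lower bound.
    """
    links = num_users * genres_per_user
    base, extra = divmod(links, num_genres)
    # `extra` genres get one more member than the rest.
    sizes = [base + 1] * extra + [base] * (num_genres - extra)
    return sum(d * (d - 1) for d in sizes)


estimate = min_two_hop_paths(46_000, 21)
print(f"{estimate:,}")  # 402,955,620
```

That is roughly 400 million paths, and the `with` clause evaluates `algo.similarity.overlap` and `apoc.coll.intersection` once for every path that passes the first `where`, before `count(1)` groups them.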

I can't find an alternative approach. Is there a better way to do this, or is there something I need to configure on the DB side to make the current query faster?

Thanks for any insight.