Hi all,

today's topic is about my attempt to extract as many short paths as possible. I am using a http connection via python.

The database has a fixed amount of nodes (~50k) but massively many parallel edges. For that reason, I needed to index edge properties and therefore chose edges to be nodes as well.

We have

(node {id:a}) - [onto] -> (edge {time, weight}] - [onto] -> (node {id:b})

There are then around 250k nodes, but a billion+ relationships. Many "edges" are parallel, and I need to retain that: I'd like to be able to aggregate in different manners.

What I need to do is run this extremely simple query: Essentially getting the neighbor nodes, given the particular structure that allows me to index on edge variables.

For example, I often need to find paths where the edge has an (indexed) time parameter:

MATCH p=(a:node)-[:onto]->(r:edge {time:$time})-[:onto]->(b:node {id :$id})

RETURN b.id AS sender,a.id AS receiver, SUM(r.weight) AS agg_weight order by receiver

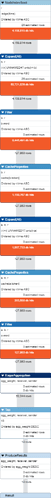

Here is the profile. I am not sure if this can be improved

In practice I discard low-weighted paths so the actual degree is lower.

It is still shockingly high, of course.

As the network grows, I will try to induce more sparsity (and redo the current network accordingly). However, the problem - in principle - will persist.

So I wonder if I can improve upon this query.

I notice that it subsets all edge-nodes in parallel to the id. Is there maybe a way to do this only for edge nodes actually connected to the focal node?

Sure, this query is fine for one node. The issue becomes that I need to do this for many, many id's.

So my first attempt is to simply pass a list and unwind

UNWIND $ids AS id

MATCH p=(a:node)-[:onto]->(r:edge {time:$time})-[:onto]->(b:node {id :$id})

RETURN b.id AS sender,a.id AS receiver, SUM(r.weight) AS agg_weight order by receiver

This takes for a good number of id's about 17 minutes. That's not ideal.

The plan also looks weird. It seems that nowhere is any index search for the node Ids in question.

Again, I get that one may want to subset edge-nodes as well. But only a small number of edge-nodes should be required, and they are all connected to the focal nodes I pass with the id argument...

I can, by the way, batch the ids as I like. I can even do a sequence of single requests if that would be faster somehow.

In any case, one idea is to use apoc and mapparallel2. This seems to work and give the same results a bit faster around 3 minutes compared to 17.

However, before doing that, I'd prefer to ensure that my query can not be improved otherwise.

Is there any better way than unwind in this case?

Given that apoc is so much faster, it almost seems like there's an issue there.

Any other ideas?

It is purely reading data, no manipulation of any sort.

I have 196GB of RAM, settings are

dbms.memory.heap.initial_size=32G

dbms.memory.heap.max_size=128G

dbms.memory.pagecache.size=96G

Thank you for any insights.

Edit:

I ran the following query instead:

MATCH p=(a:node)-[:onto]->(r:edge {time:$time})-[:onto]->(b:node)

WHERE b.id in $ids

RETURN b.id AS sender,a.id AS receiver, SUM(r.weight) AS agg_weight order by receiver

This was much faster

On a smaller subset:

WHERE: 22,016 seconds

APOC: 2,3 minutes

UNWIND: 15+ minutes

Here is its profile

Is this legit?

This is even faster than the sum of individual calls.

How can this be so much faster?

There must be some mistake here, but it seems to return the same values.