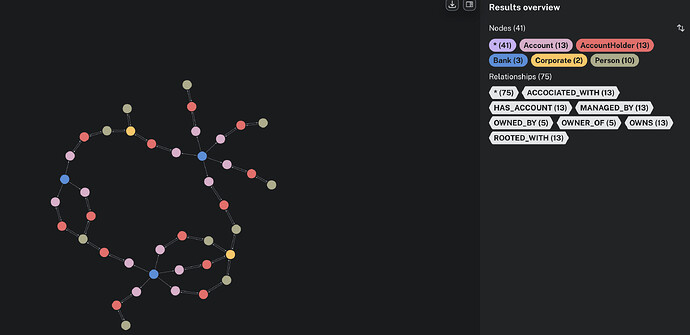

Hi all. Neo4j is supposed to be very dynamic, accommodating, and semi-structured, implying that for one node type, one record/instance should be able to have, say, the first 5 properties, and a second should be able to have, say, 8.

With the type of Kafka Connect sink below, that sort of breaks, since the Cypher statement lists every property explicitly.

I was thinking one option is to keep the "compulsory" properties at, say, the root level and then have a value property that's a dynamic document.
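
One caveat with the "value property that's a doc" idea: Neo4j property values can be primitives or lists of primitives, but not nested maps, so the dynamic part would need to be flattened onto the node or stored as a JSON string. A minimal sketch of splitting an event that way, assuming the six fields from the sink below are the compulsory ones; the `REQUIRED` tuple, the `properties` key, and the `split_event` helper are hypothetical names for illustration:

```python
import json

# Assumption: these are the "compulsory" properties, taken from the sink config.
REQUIRED = ("accountEntityId", "bicfi", "accountId",
            "tenantId", "accountAgentId", "fullName")


def _is_storable(value) -> bool:
    """True if the value is a primitive or a list of primitives."""
    primitive = (str, int, float, bool, type(None))
    if isinstance(value, list):
        return all(isinstance(item, primitive) for item in value)
    return isinstance(value, primitive)


def split_event(event: dict) -> dict:
    """Keep required fields at the root and collect the rest in a flat map."""
    missing = [key for key in REQUIRED if key not in event]
    if missing:
        raise ValueError(f"missing compulsory properties: {missing}")

    required = {key: event[key] for key in REQUIRED}
    extras = {}
    for key, value in event.items():
        if key in REQUIRED:
            continue
        # Nested documents cannot be stored as a property, so serialize them.
        extras[key] = value if _is_storable(value) else json.dumps(value, sort_keys=True)
    return {**required, "properties": extras}
```

With a payload shaped like this, a statement could set the root-level fields explicitly and then apply the `properties` map in one go, so each record carries only the extras it actually has.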

Curious to hear how others have worked around this and made it dynamic enough while still being able to handle edges. I sort of assume an edge will only be shown if the values required on both sides are present on the nodes; if one node does not have the source value, that edge is simply ignored when the node's edges/links are shown.
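
For the edge part, one pattern that matches this assumption is to `MATCH` both endpoints before `MERGE`-ing the relationship: if either node is missing (or the value on the event is null), the `MATCH` returns no rows and nothing is created. A sketch of that statement, plus a tiny in-memory illustration of the same rule; the `PAID` relationship type, the `payerId`/`payeeId` fields, and the `linkable_edges` helper are hypothetical:

```python
# Cypher sketch for a relationship sink: no row from MATCH means no edge.
EDGE_CYPHER = (
    "MATCH (a:AccountHolder {accountEntityId: event.payerId}) "
    "MATCH (b:AccountHolder {accountEntityId: event.payeeId}) "
    "MERGE (a)-[:PAID]->(b)"
)


def linkable_edges(node_ids, edge_events):
    """Return only the (payer, payee) pairs whose two endpoints both exist."""
    known = set(node_ids)
    return [
        (event["payerId"], event["payeeId"])
        for event in edge_events
        if event.get("payerId") in known and event.get("payeeId") in known
    ]
```

One thing to keep in mind with this pattern: an edge event that arrives before its node events is dropped rather than deferred, so topic ordering matters.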

Also, something is still broken in the code/sink below and I can't get it working, so my stream is broken for now.

    curl -X POST http://localhost:8083/connectors \
      -H "Content-Type: application/json" \
      -d '{
        "name": "neo4j-accountHolder-node-sink",
        "config": {
          "connector.class": "org.neo4j.connectors.kafka.sink.Neo4jConnector",
          "topics": "ob_account_holders,ib_account_holders",
          "neo4j.server.uri": "bolt://neo4j:7687",
          "neo4j.authentication.basic.username": "neo4j",
          "neo4j.authentication.basic.password": "dbpassword",
          "neo4j.topic.cypher.ob_account_holders": "CREATE (a:AccountHolder {accountEntityId: event.accountEntityId, bicfi: event.bicfi, accountId: event.accountId, tenantId: event.tenantId, accountAgentId: event.accountAgentId, fullName: event.fullName}) ON DUPLICATE KEY IGNORE",
          "neo4j.topic.cypher.ib_account_holders": "CREATE (a:AccountHolder {accountEntityId: event.accountEntityId, bicfi: event.bicfi, accountId: event.accountId, tenantId: event.tenantId, accountAgentId: event.accountAgentId, fullName: event.fullName}) ON DUPLICATE KEY IGNORE",
          "key.converter": "org.apache.kafka.connect.storage.StringConverter",
          "value.converter": "org.apache.kafka.connect.json.JsonConverter",
          "neo4j.batch.timeout.msecs": 5000,
          "neo4j.retry.backoff.msecs": 3000,
          "neo4j.retry.max.attemps": "5",
          "tasks.max": "2",
          "neo4j.batch.size": 1000,
          "value.converter.schemas.enable": false
        }
      }'
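
One thing that stands out in the config above: `ON DUPLICATE KEY IGNORE` is MySQL syntax rather than Cypher, so the statement is likely being rejected. The usual Cypher equivalent is `MERGE` on a key, and `SET a += <map>` copies whatever keys a map contains, which would also cover the "5 properties here, 8 there" case. A sketch that builds the same payload with that statement, assuming `accountEntityId` is the unique key and that events are flat JSON (no nested objects). The config keys are copied from the post and are worth checking against the docs for the installed connector version, since they differ between releases; `build_connector` is a hypothetical helper and the batch/retry tuning keys are left out for brevity:

```python
import json

# Assumption: accountEntityId uniquely identifies an account holder.
# MERGE creates the node only if it does not exist yet; SET a += event then
# copies every key present on the (flat) event map onto the node.
MERGE_CYPHER = (
    "MERGE (a:AccountHolder {accountEntityId: event.accountEntityId}) "
    "SET a += event"
)

TOPICS = ["ob_account_holders", "ib_account_holders"]


def build_connector(name: str = "neo4j-accountHolder-node-sink") -> dict:
    """Build the Kafka Connect payload with one Cypher statement per topic."""
    config = {
        "connector.class": "org.neo4j.connectors.kafka.sink.Neo4jConnector",
        "topics": ",".join(TOPICS),
        "neo4j.server.uri": "bolt://neo4j:7687",
        "neo4j.authentication.basic.username": "neo4j",
        "neo4j.authentication.basic.password": "dbpassword",
        "key.converter": "org.apache.kafka.connect.storage.StringConverter",
        "value.converter": "org.apache.kafka.connect.json.JsonConverter",
        "value.converter.schemas.enable": False,
        "tasks.max": "2",
    }
    # Key pattern copied from the post; verify it for your connector version.
    for topic in TOPICS:
        config[f"neo4j.topic.cypher.{topic}"] = MERGE_CYPHER
    return {"name": name, "config": config}


if __name__ == "__main__":
    # Print the JSON body that would be POSTed to the Connect REST API.
    print(json.dumps(build_connector(), indent=2))
```

The printed JSON can be used as the `-d` body of the `curl` call. If events can contain nested objects, `SET a += event` would fail on them, so the dynamic part would need flattening first.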